Software Review

Blue Crab 4.9.5

Developer: Limit Point Software

Price: $25

Requirements: Mac OS X 10.4. Universal.

Trial: Fully-featured (10 days)

I love writing and found Blogger.com to be the right outlet for my passion. When I work on qaptainqwerty.blogspot.com, I do not need to worry about HTML syntax or other technicalities. I just need to concentrate on whatever topics I want to rant about. The one downside is that the whole blog exists only on the Web. I have been looking for a way to archive my blog for posterity. I need a Web crawler, and Limit Point Software’s Blue Crab looks promising.

Standard Installation

Blue Crab’s installation is straightforward: download the disk image, mount it, and drag the package to the hard drive. You do need to obtain a password from Limit Point Software, however, to use either the ten-day trial or the full version.

Overview

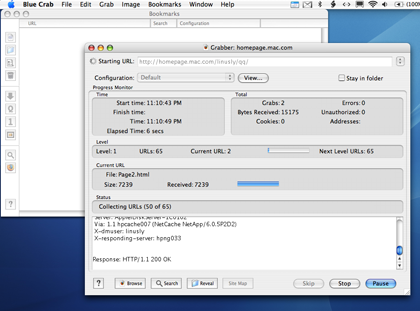

Web crawling is a serious job, and Blue Crab tries to make it easier. The most straightforward way is to select “Crawl URL” from the Grab menu. Enter a Web address, click Start, and off you go with the Default configuration. As Blue Crab crawls the URL, the log window tells you exactly what is happening. However, all that reporting slows the process down considerably. By selecting “Grab Quickly,” you use a faster download technology, and there’s less reporting. For an even faster result, you can go with the “Download One Page” option but, of course, you will get just that one page. In all cases, you have the option of grabbing the URL from the Web browser where it’s currently open, so you don’t have to worry about mistyping it. Once the Web site is downloaded, you can browse it or search through it with Blue Crab’s built-in search function.

The classic Crawl URL shows what’s happening with the download.

Before I had the Blogger site, I had another site for showing off my still cartoons. I would draw the cartoons at work to entertain my colleagues, usually covering topics such as office politics or current tech news. I would then capture the drawings with a digital camera and import them into iPhoto. A simple HTML export generated the Web gallery. Simple Web sites like this work well as either a one-page grab or a complete grab.

Options Galore

Chances are the Web site the typical user wants to grab is a complex one, with links to other Web sites, banner ads, and Flash animations. Blue Crab has many ways of helping with the grab, although the learning curve gets steeper from here. Probably the most obvious way is to limit the number of levels to dig into the site. Using my simple .Mac site as an example, http://homepage.mac.com/linusly/qq would be Level 0, http://homepage.mac.com/linusly/qq/index-Pages would be Level 1, and so on. You can also limit the folder depth at which crawling occurs. Whereas the level is associated with HTML links, the folder depth is about the number of folders in any given URL. A folder depth setting of 3 would cause links such as http://www.blah.com/folder1/folder2/folder3/folder4 to be skipped.
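To make the difference between levels and folder depth concrete, here is a minimal Python sketch of a breadth-first crawl that enforces both limits. It is not Blue Crab’s code. The link graph is a hypothetical dictionary standing in for downloaded pages, the about.html page is made up, and folder depth is assumed to count every non-empty path segment, including a final file name.

```python
from collections import deque
from urllib.parse import urlparse


def folder_depth(url: str) -> int:
    """Count the non-empty path segments of a URL (assumed meaning of 'folder depth')."""
    return len([seg for seg in urlparse(url).path.split("/") if seg])


def crawl_levels(start, links, max_level, max_depth):
    """Breadth-first walk that records the link level of every URL it accepts.

    `links` maps each URL to the URLs found on that page; a real crawler would
    download the page and parse its links instead.
    """
    levels = {start: 0}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        if levels[url] >= max_level:
            continue  # level limit reached: don't follow this page's links
        for target in links.get(url, []):
            if target in levels or folder_depth(target) > max_depth:
                continue  # already seen, or too many folders deep
            levels[target] = levels[url] + 1
            queue.append(target)
    return levels


# Hypothetical link graph; about.html is an invented page for illustration.
links = {
    "http://homepage.mac.com/linusly/qq": [
        "http://homepage.mac.com/linusly/qq/index-Pages",
    ],
    "http://homepage.mac.com/linusly/qq/index-Pages": [
        "http://homepage.mac.com/linusly/qq/about.html",
        "http://www.blah.com/folder1/folder2/folder3/folder4",
    ],
}
print(crawl_levels("http://homepage.mac.com/linusly/qq", links, max_level=2, max_depth=3))
```

With a level limit of 2 and a folder depth limit of 3, the sketch keeps the first three pages (Levels 0 through 2) and skips the folder4 link, which has four path segments.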

Oodles of options to download the whole Web or not.

Level and folder restrictions are easy to implement, but other settings may not be so straightforward. For instance, to limit the crawl to files matching certain criteria, the text to be searched for has to be URL-encoded. Space characters must be replaced by %20 and the @ sign must be entered as %40, for example. The two digits following the percent sign are the hexadecimal value of the original character. Hex equivalents of everyday characters may be more meaningful to you if you have some programming experience. Supposedly there is a listing of all these so-called “unsafe characters” somewhere on the Web, but apart from some references to RFC 1738, I have not stumbled across it yet. It would be helpful if Blue Crab’s documentation included a full table of unsafe characters and their hex equivalents, or if the software simply let you type the characters without URL encoding.
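If you have Python handy, its standard library can do the encoding for you. The sketch below also spells out the rule by hand: keep the characters that RFC 3986 (which updates RFC 1738) lists as unreserved, and replace every other byte of the UTF-8 text with % and its two-digit hex value. The `percent_encode` helper is hypothetical, `urllib.parse.quote` is the real library function, and whether Blue Crab expects exactly this encoding for every character is an assumption.

```python
from urllib.parse import quote

# Characters RFC 3986 calls "unreserved"; everything else is escaped here.
UNRESERVED = set(
    "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789-._~"
)


def percent_encode(text: str) -> str:
    """Replace each byte outside UNRESERVED with '%' plus its two hex digits."""
    out = []
    for byte in text.encode("utf-8"):
        ch = chr(byte)
        out.append(ch if ch in UNRESERVED else f"%{byte:02X}")
    return "".join(out)


print(percent_encode("my blog@home"))   # my%20blog%40home
print(quote("my blog@home", safe=""))   # same result from the standard library
```

Passing `safe=""` matters: by default `quote` leaves the slash unescaped, which suits whole paths but not a search string.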

Without a doubt, Blue Crab is full of features to control how much you download from the Web. Most of the time, I can guess what the various features are for. For other features, looking them up in Help Viewer clarifies the issue. Unfortunately, Blue Crab’s documentation has not kept up with its development, and some features are simply not mentioned. I suspect the “Ignore dynamic URLs” option can be useful for skipping ads, but neither the documentation on the hard drive nor the online tour mentions it. Only the version history file, accessible as Release Notes in Blue Crab, refers to the feature. The online tour does include a section called Improving Speed, in which many Blue Crab options are discussed with regard to optimum content and fastest speed.
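Because Blue Crab does not document what it treats as a dynamic URL, here is one common heuristic, assumed purely for illustration: flag URLs that carry a query string or end in a server-side script extension. The extension list, the `looks_dynamic` helper, and the ad-server URLs are all hypothetical; Blue Crab’s real rule may differ.

```python
from urllib.parse import urlparse

# Hypothetical list of server-side script extensions.
SCRIPT_EXTENSIONS = (".php", ".asp", ".aspx", ".jsp", ".cgi", ".pl")


def looks_dynamic(url: str) -> bool:
    """Guess whether a page is generated on the fly: query string or script extension."""
    parts = urlparse(url)
    return bool(parts.query) or parts.path.lower().endswith(SCRIPT_EXTENSIONS)


urls = [
    "http://qaptainqwerty.blogspot.com/",
    "http://ads.example.com/serve.php?zone=12",
    "http://www.example.com/banner.cgi",
]
# Keep only the URLs that look static.
print([u for u in urls if not looks_dynamic(u)])
```

A filter like this would skip many ad servers, but it would also skip legitimate pages built by scripts, which may be why such an option is off by default.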

Another function that is covered only in the version history is imaging. At first, I thought it was related to grabbing images. It turned out to be a way to capture a Web site as a picture file, although I am not too sure how to use it. On the surface, it seems the end result is a JPEG file that mostly resembles what the browser showed at the moment. But then Blue Crab also opens a sort of Web browser window within which you can navigate the Web. I do not know what the purpose of Blue Crab’s browser window is. Bug or feature, I will have to wait for the documentation to catch up.

Blue Crab makes use of configurations to store combinations of the many settings. You start with a default configuration that is not changeable. Instead, you can copy the default configuration or create new ones. While it may be interesting to poke around with Blue Crab’s oodles of options, I think it would be more helpful if the software came with pre-set configurations. In addition to the default, perhaps there should be one for ignoring pictures and movies for faster download, another for optimal speed with all the settings as suggested by the online tour, and one for optimal content.
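The configuration model, a locked default that you copy and then modify, maps neatly onto an immutable record. This Python sketch uses a frozen dataclass. The field names and default values are hypothetical placeholders rather than Blue Crab’s actual settings, and `NO_MEDIA` models the picture-and-movie-free preset suggested in the paragraph above.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class CrawlConfig:
    """Hypothetical bundle of crawl settings; names and defaults are placeholders."""
    name: str
    max_level: int = 3
    max_folder_depth: int = 5
    grab_images: bool = True
    grab_movies: bool = True
    ignore_dynamic_urls: bool = False


# frozen=True mimics a default configuration that cannot be changed in place.
DEFAULT = CrawlConfig(name="Default")

# A new configuration is a copy of the default with selected settings overridden.
NO_MEDIA = replace(DEFAULT, name="No pictures or movies",
                   grab_images=False, grab_movies=False)

try:
    DEFAULT.max_level = 1  # editing the default in place is rejected
except FrozenInstanceError:
    print("The default configuration is read-only; copy it instead.")
```

`dataclasses.replace` returns a new object, so the default stays untouched no matter how many variants you derive from it.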

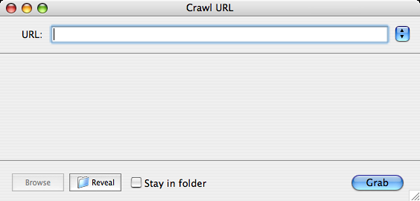

If having too many options turns you off, you can try Blue Crab Lite, a straightforward crawler that allows you to manually control the downloading process. All you can do is pause and resume the crawling process, or stop it altogether. Limit Point offers Blue Crab Lite as one of its many utilities. Interestingly, with a single donation of $10, $15, $20, or $25, you can unlock all the utilities and receive their updates free, too.

Blue Crab Lite is the bare-bones version of Blue Crab, in which you have no control over the crawling process other than pausing or stopping it.

Verdict

Power and ease of use usually don’t go hand in hand. While anyone equipped with Blue Crab can easily start grabbing Web pages, making the most of the software requires an extra level of technical expertise. Not everyone can readily associate %20 with the space character or know the extensions for image files displayable on the Web. It does not help that the documentation is a few versions behind. Reference material in the documentation, even something as simple as a URL, would help less technical users. Still, Blue Crab is a good tool to have for any Web-archiving endeavors.
